In 2025, the proposed llms.txt standard is becoming a vital tool for website owners because it offers a new way to manage how AI systems interact with website content. As AI systems such as ChatGPT, Gemini, and Claude get better at crawling and analysing web content, managing that content effectively matters more than ever. llms.txt aims to help keep original content safe and high-quality by letting website owners tell AI bots how they may access, use, and handle their content.

What is llms.txt?

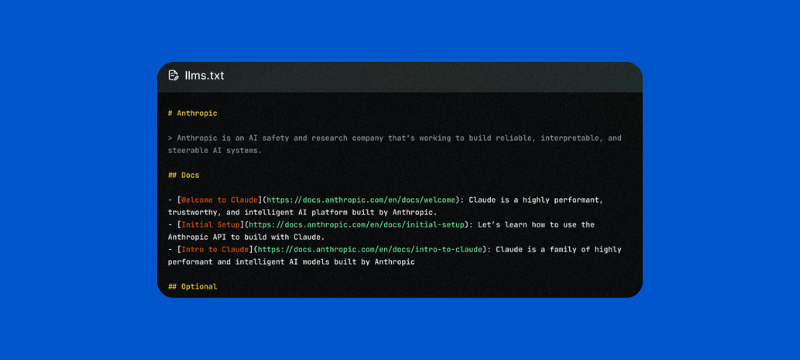

llms.txt is a simple text file that website owners place in their website's root directory to give instructions to AI tools like ChatGPT or Gemini. It is similar to robots.txt, which gives instructions to search engine crawlers such as Google's, but llms.txt is made specifically for AI systems.

The file tells AI tools three main things: which parts of your website they can read, what they can do with your content, and which rules they need to follow. It is written in Markdown-formatted plain text that is easy for both humans and AI to read.

More and more website owners, especially content creators and developers, are starting to publish llms.txt files. However, support among the major AI companies is still incomplete, and following the file's instructions is voluntary.

Why is llms.txt Useful?

llms.txt offers several benefits for website owners:

- Preserves Content Integrity: Provides clear instructions to AI systems about which content should not be used for training or generation purposes, helping preserve the value of copyrighted information and creative works.

- Control Over AI Training Permissions: Lets website owners specify exactly which parts of their site may be used for AI model training, helping keep sensitive or premium content protected.

- Improved Content Privacy: Enables website owners to designate private or confidential sections that should be omitted from AI processing, maintaining better control over data privacy.

- Enhanced AI Understanding: Helps AI systems better understand the context and structure of your content, leading to more accurate and relevant AI-generated responses about your site.

- Professional Communication: Provides a standardized and clear way to communicate with AI companies about content usage policies and licensing requirements.

llms.txt vs robots.txt vs sitemap.xml

Although all three files serve to communicate with automated systems, they have distinct purposes and audiences. “robots.txt” is designed for traditional search engine crawlers and focuses on basic “allow/disallow” commands for SEO purposes. “sitemap.xml” helps search engines understand your site structure and find all important pages for indexing. “llms.txt,” however, is specifically crafted for AI systems and offers context-aware instructions for content processing and training purposes.

| Feature | robots.txt | sitemap.xml | llms.txt |
| --- | --- | --- | --- |
| Purpose | Control search crawler access | Guide search engine indexing | Manage AI content usage |
| Who reads it | Search engine crawlers | Search engines | AI systems and LLMs |
| Format | Simple text commands | XML structure | Markdown with metadata |
| Control level | Basic allow/disallow | Page discovery | Granular AI usage rules |

Structure of an llms.txt File

Key Elements to Include

The llms.txt file follows a structured format that AI systems can parse easily. Because llms.txt is still an emerging proposal, individual AI systems may not recognize every element listed here. The essential components are:

- llm-agent: Specifies which AI tools or systems the rules apply to, allowing targeted control over different AI platforms.

- Disallow: Marks specific pages, directories, or content types that AI systems should not access or use.

- Allow: Grants permission for certain content to be used, even if broader restrictions are in place.

- Contact/Policy links (optional): Includes contact details and links to full AI usage rules so AI companies can reach out or read complete policies.

- Comments: Human-readable notes that explain the reasoning behind specific rules or provide additional context.
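To make the directive part of the format concrete, here is a minimal Python sketch of how a tool could read llm-agent, Allow, and Disallow lines and decide whether a path may be used. No evaluation rules for these directives are defined for llms.txt, so the sketch assumes robots.txt's precedence rule from RFC 9309: the longest matching path prefix wins and Allow wins a tie, which matches the idea that Allow can override a broader restriction. The RuleGroup type, the function names, the agent name ExampleBot, and the /members-only/preview/ path are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class RuleGroup:
    """One llm-agent group: the agents it names and its (allowed, path prefix) rules."""
    agents: list[str] = field(default_factory=list)
    rules: list[tuple[bool, str]] = field(default_factory=list)


def parse_directives(text: str) -> list[RuleGroup]:
    """Collect llm-agent / Allow / Disallow lines; every other line is ignored."""
    groups: list[RuleGroup] = []
    current: RuleGroup | None = None
    for raw in text.splitlines():
        # Drop "#" comments; this also drops Markdown headings, which carry no rules.
        line = raw.split("#", 1)[0].strip()
        if ":" not in line:
            continue
        key, value = (part.strip() for part in line.split(":", 1))
        key = key.lower()
        if key == "llm-agent":
            # Consecutive llm-agent lines share one group, as in robots.txt.
            if current is None or current.rules:
                current = RuleGroup()
                groups.append(current)
            current.agents.append(value.lower())
        elif key in ("allow", "disallow") and current is not None and value:
            current.rules.append((key == "allow", value))
    return groups


def is_allowed(groups: list[RuleGroup], agent: str, path: str) -> bool:
    """Longest matching prefix wins, Allow wins ties, and no match means allowed."""
    agent = agent.lower()
    # Prefer a group naming this agent; otherwise fall back to the "*" group.
    group = next((g for g in groups if agent in g.agents), None)
    if group is None:
        group = next((g for g in groups if "*" in g.agents), None)
    if group is None:
        return True
    best_len, allowed = -1, True
    for rule_allowed, prefix in group.rules:
        if path.startswith(prefix) and (
            len(prefix) > best_len or (len(prefix) == best_len and rule_allowed)
        ):
            best_len, allowed = len(prefix), rule_allowed
    return allowed


# /members-only/preview/ is a hypothetical path used to show an Allow override.
EXAMPLE = """\
llm-agent: *
Disallow: /private/
Disallow: /members-only/
Allow: /members-only/preview/
"""

groups = parse_directives(EXAMPLE)
print(is_allowed(groups, "ExampleBot", "/private/notes"))               # False
print(is_allowed(groups, "ExampleBot", "/members-only/preview/intro"))  # True
print(is_allowed(groups, "ExampleBot", "/tutorials/"))                  # True
```

Whether a given AI system evaluates rules this way is up to that system; the sketch only shows one consistent interpretation.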

Here’s a simple example of an llms.txt file written in Markdown:

```markdown
# My Website

> A comprehensive resource for web development tutorials and tools

## Content Guidelines

- Please respect our content licensing terms
- Contact us for commercial AI training usage

## Sections

### Documentation

- /docs/
- /tutorials/
- /guides/

### Restricted Content

- /private/
- /members-only/
- /premium/

llm-agent: *
Disallow: /private/
Disallow: /admin/
Disallow: /members-only/
Disallow: /premium/
Allow: /public-docs/
Allow: /tutorials/

# Contact for AI training partnerships
Contact: [email protected]
Policy: https://example.com/ai-usage-policy

# This content is available under a Creative Commons license
# Commercial AI training requires explicit permission
```
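The Markdown half of the example (title, one-line summary, and listed sections) is what gives an AI system context about the site. As a rough illustration, the Python sketch below pulls those pieces out of an abridged copy of the example. This is our own simplified reading of the structure, not how any particular AI system processes the file; the LlmsOutline type and outline function are hypothetical names.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LlmsOutline:
    title: str | None = None
    summary: str = ""
    sections: dict[str, list[str]] = field(default_factory=dict)


def outline(markdown: str) -> LlmsOutline:
    """Pull the H1 title, "> " summary, and "- " items under each ##/### heading."""
    result = LlmsOutline()
    summary_lines: list[str] = []
    current: list[str] | None = None
    for raw in markdown.splitlines():
        line = raw.strip()
        if line.startswith("# ") and result.title is None:
            result.title = line[2:].strip()
        elif line.startswith("> "):
            summary_lines.append(line[2:].strip())
        elif line.startswith(("## ", "### ")):
            # Start (or reuse) the list of items for this heading.
            current = result.sections.setdefault(line.lstrip("#").strip(), [])
        elif line.startswith("- ") and current is not None:
            current.append(line[2:].strip())
    result.summary = " ".join(summary_lines)
    return result


SAMPLE = """\
# My Website

> A comprehensive resource for web development tutorials and tools

## Sections

### Documentation

- /docs/
- /tutorials/

### Restricted Content

- /private/
- /members-only/
"""

result = outline(SAMPLE)
print(result.title)                           # My Website
print(result.sections["Documentation"])       # ['/docs/', '/tutorials/']
print(result.sections["Restricted Content"])  # ['/private/', '/members-only/']
```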

How to Upload and Use llms.txt on Your Site

Follow these steps:

- Create the llms.txt file in a plain text editor like Notepad or VS Code, following the structure outlined above.

- Upload to your website’s root directory so it’s available at https://yourwebsite.com/llms.txt.

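- Add a reference line in robots.txt (optional) by including User-agent: * followed by LLM-policy: /llms.txt. LLM-policy is not a standard robots.txt directive, so crawlers that don't recognize it will simply skip it.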

- Test the file with an llms.txt validator if one is available, or manually check that it loads at its direct URL.

- If you use WordPress, upload the file with a File Manager plugin from your dashboard, or via FTP to the "public_html" folder.
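As a rough self-check for these steps, the Python sketch below builds the expected root-level llms.txt URL, can fetch it to confirm it is reachable, and uses the standard-library urllib.robotparser to show how Python's built-in robots.txt parser treats an unrecognized line such as LLM-policy. The function names and the User-Agent string are hypothetical, and yourwebsite.com is a placeholder domain.

```python
from __future__ import annotations

from urllib.parse import urljoin
from urllib.request import Request, urlopen
from urllib.robotparser import RobotFileParser


def llms_txt_url(site: str) -> str:
    """Build the root-level llms.txt URL from any URL on the site."""
    return urljoin(site, "/llms.txt")


def fetch_llms_txt(site: str, timeout: float = 10.0) -> tuple[int, str]:
    """Request llms.txt and return (HTTP status, Content-Type).

    urlopen raises urllib.error.HTTPError for 4xx/5xx responses such as 404.
    """
    # The User-Agent value is a made-up placeholder for this check.
    request = Request(llms_txt_url(site), headers={"User-Agent": "llms-txt-check/0.1"})
    with urlopen(request, timeout=timeout) as response:
        return response.status, response.headers.get("Content-Type", "")


def robots_can_fetch(robots_txt: str, agent: str, url: str) -> bool:
    """Evaluate robots.txt rules with Python's standard-library parser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(agent, url)


ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
LLM-policy: /llms.txt
"""

print(llms_txt_url("https://yourwebsite.com/blog/some-post"))  # https://yourwebsite.com/llms.txt
# The unknown LLM-policy line is skipped; only the Disallow rule takes effect.
print(robots_can_fetch(ROBOTS_TXT, "AnyBot", "https://yourwebsite.com/private/a"))  # False
print(robots_can_fetch(ROBOTS_TXT, "AnyBot", "https://yourwebsite.com/llms.txt"))   # True
# fetch_llms_txt("https://yourwebsite.com") needs network access, so it is not run here.
```

Checking the Content-Type returned by fetch_llms_txt is a quick way to confirm the server delivers the file as plain text rather than, for example, an HTML error page.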

Crawlers vs LLMs: What’s the Difference?

How Search Engine Crawlers Work

Search engine crawlers follow robots.txt rules as they systematically scan websites and index content for search results, concentrating on SEO-relevant pages. Their main purpose is to help users find relevant information through search queries, and the pages they index are then ranked by the search engine's algorithms.

How LLMs Work

Large language models are trained on web content gathered by AI crawlers, may retain some of that information, and generate new content based on the patterns they have learned. Unlike search crawlers, LLMs don't just index content: they can reproduce, transform, and generate new content from what they have processed, which makes content management more important.

FAQs:

- What is the difference between llms.txt and llms-full.txt?

llms.txt is the basic, concise file, while llms-full.txt is an extended companion file that typically contains much more detail, often the full text of the site's key pages in a single Markdown document.

- What are LLMs used for?

Large language models are used for natural language processing tasks, including text generation, translation, summarization, question answering, and conversational AI applications.

- Does llms.txt work with all AI tools?

Not yet. Support for llms.txt differs by AI platform. While the standard is gaining adoption, not all AI tools have implemented full support for llms.txt directives.

- How do LLMs generate text?

LLMs generate text by repeatedly predicting a likely next token (a word or part of a word) based on patterns learned from training data, using neural networks to understand context and keep the output consistent.

- How do LLMs understand text?

LLMs process text with transformer architectures that interpret each word in relation to its context, building mathematical representations that capture meaning, relationships, and patterns in language.

- What programming language do LLMs use?

Most LLMs are built in Python with frameworks such as PyTorch or TensorFlow, though the models themselves work through mathematical transformations rather than traditional programming logic.
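The next-word idea from the FAQ can be illustrated with a deliberately tiny toy: count which word follows which in some training text, then repeatedly append the most frequent follower. Real LLMs use transformer neural networks over tokens and usually sample from a probability distribution rather than always taking the top choice, so this Python sketch only shows the "predict the next item from learned patterns" loop. The corpus and function names are made up for illustration.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows which word in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for word, next_word in zip(words, words[1:]):
        follows[word][next_word] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 5) -> str:
    """Greedily append the most frequent follower; ties go to the one seen first."""
    out = [start]
    for _ in range(max_words):
        options = follows.get(out[-1])
        if not options:
            break  # no known continuation for this word
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


corpus = "llms.txt helps ai tools understand your site llms.txt helps website owners share context"
model = train_bigrams(corpus)
print(generate(model, "llms.txt"))  # llms.txt helps ai tools understand your
```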

Conclusion

The llms.txt standard is a noteworthy advancement in 2025, allowing website owners to define how AI systems interact with their content. As artificial intelligence continues to evolve and reshape digital experiences, having a clear and structured way to protect your online content is more important than ever.

At bodHOST, we believe in staying ahead of emerging technologies by supporting responsible AI practices. By implementing llms.txt, you not only help protect your digital assets but also contribute to a more transparent and ethical AI ecosystem.

Learn more in our detailed article: AI-Powered Webpage Optimization: What It Is and How to Use It